Monitor LLM performance and usage

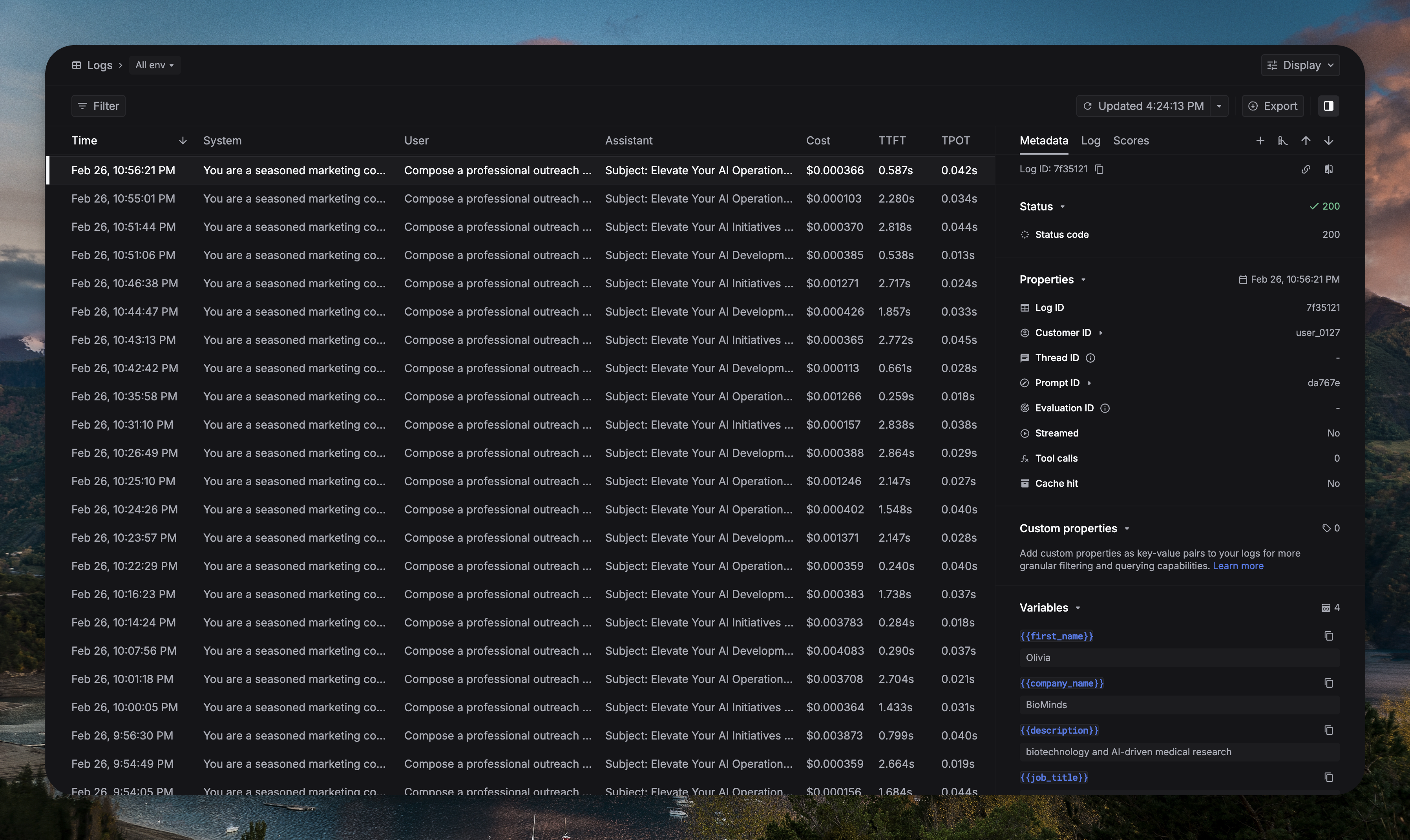

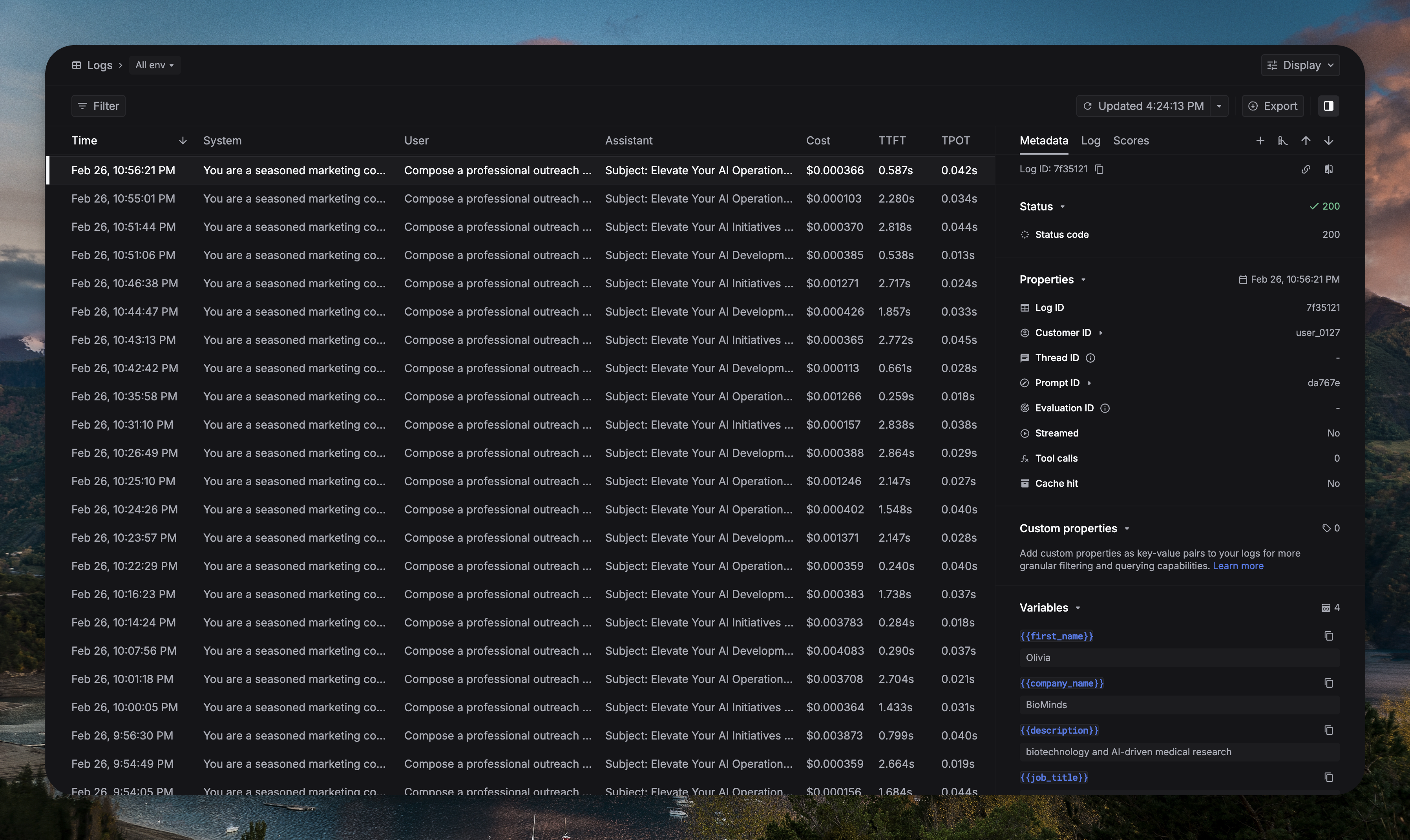

Once you have set up the environment and run the request, the LLM logs appear in Keywords AI.

Facing issues? Reach out to our engineering team at support@linkup.so, contact us on Discord, or book a 15-minute call with a member of our technical team.